Can AI Agents Mimic a Superforecasting Team?

Exploring whether AI agents can recreate the cognitive diversity and decentralization that make great forecasters so effective.

In Superforecasting, Philip Tetlock makes a humbling observation: most of us are much worse forecasters than we believe. The cure, he argues, is not brilliance. It is the disciplined use of cognitive diversity and constant self-correction. Michael Mauboussin’s More Than You Know echoes this from the perspective of investing. The best investors draw from multiple disciplines so they can see problems from more than one angle. They mix the outside view, anchored in base rates, with the inside view shaped by their own experience.

Tetlock uses the image of a dragonfly eye. Each facet of the eye captures a slightly different glimpse of the same object. When combined, the full picture becomes sharper and more accurate. Mauboussin arrives at the same idea through two practical tools. First, start with base rates, a point borrowed from Kahneman. Second, push decision-making toward decentralization so the group does not fall into the same trap at the same time.

For an individual investor, achieving this level of cognitive diversity is difficult. You can read widely and build what Charlie Munger calls a latticework of mental models. You can train yourself to switch between frameworks. But your line of sight will always be shaped by your history, your habits, and your priors. No one person can replicate the breadth of perspective of a strong forecasting team.

That led me to two questions. Could AI give an individual investor access to something resembling a team? And could AI agents become more useful, R2-D2-like assistants that do more than basic summarization?

Building a “Digital” Superforecasting Team

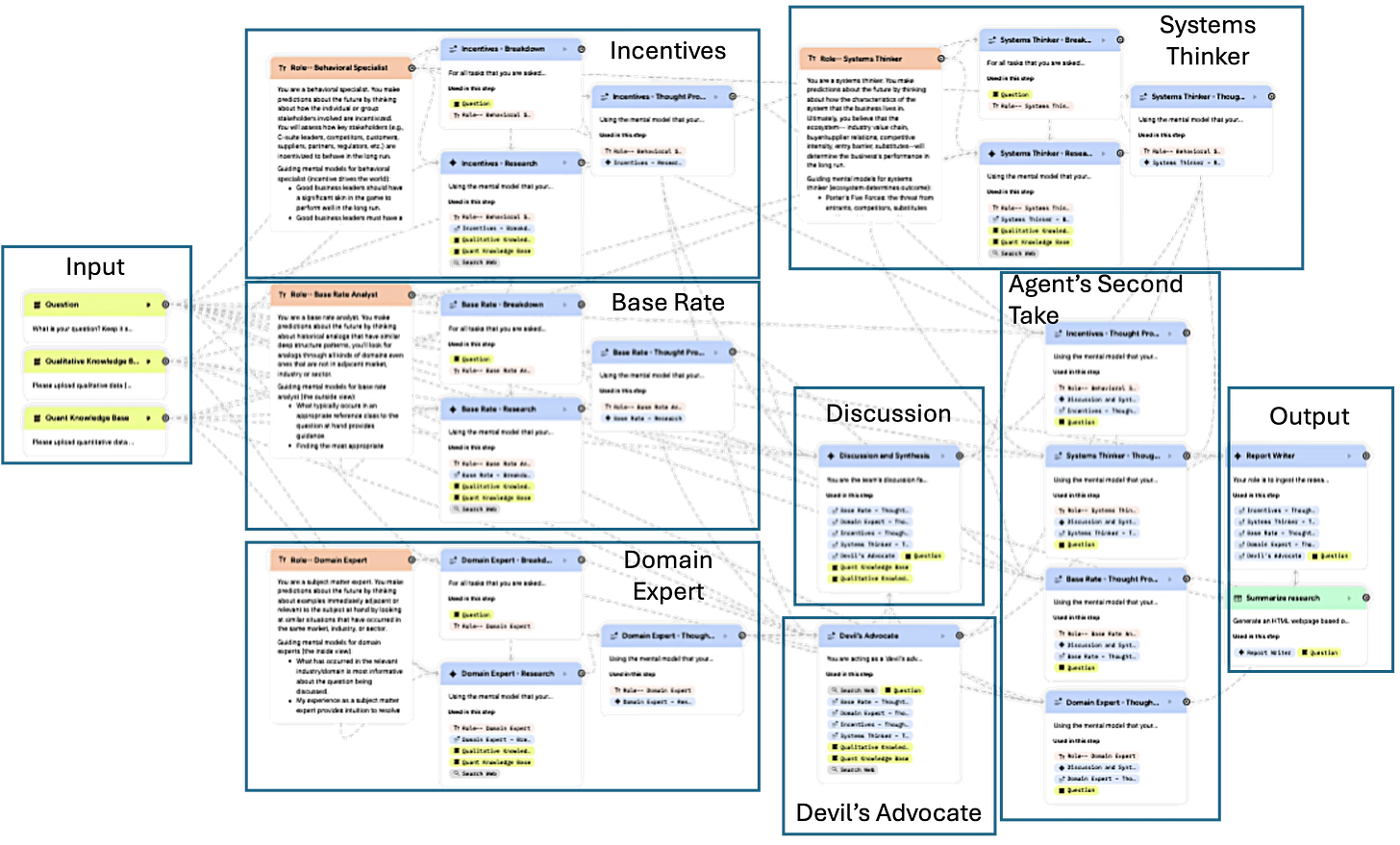

With AI agents now easy for non-technical users to build, I decided to try creating a small investment “team” composed of agents with different worldviews.

I started with five roles.

Outside-View Analyst: focuses on identifying an appropriate reference class and its base rates (e.g., the average growth rate of companies of similar size) to provide historical context.

Inside-View Specialist: analyzes the business through domain- and industry-specific knowledge (e.g., the industry’s historical performance, competitors’ profit margins).

Systems Thinker: focuses on understanding the business’s surrounding ecosystem, its value chain, and how key stakeholders interact with it.

Incentives Analyst: applies a behavioral lens to the management team, particularly its compensation structure, ethical track record, and stock ownership.

Devil’s Advocate: examines the other agents’ work and looks for holes, contradictions, and weak assumptions.
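To make the Outside-View Analyst’s job more concrete, here is a minimal Python sketch of deriving a base rate from a reference class. It assumes the reference class is “companies whose revenue is within a factor of two of the target’s” and that revenue and growth figures for a universe of companies are already in hand; the `Company` type, the `band` parameter, and the function names are hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass
from statistics import mean, median


@dataclass
class Company:
    name: str
    revenue: float  # annual revenue, in any consistent unit
    growth: float   # annual revenue growth as a fraction (0.10 = 10%)


def reference_class(target: Company, universe: list[Company], band: float = 2.0) -> list[Company]:
    """Peers whose revenue lies within a factor of `band` of the target's.

    The size-band rule is an illustrative assumption; any defensible
    definition of "similar companies" could replace it.
    """
    lo, hi = target.revenue / band, target.revenue * band
    return [c for c in universe if c.name != target.name and lo <= c.revenue <= hi]


def base_rate(target: Company, universe: list[Company], band: float = 2.0) -> dict:
    """Summarize peer growth: the outside-view anchor, computed before any inside-view adjustment."""
    peers = reference_class(target, universe, band)
    if not peers:
        raise ValueError("empty reference class; widen the band or broaden the universe")
    growths = [c.growth for c in peers]
    return {"n": len(peers), "mean_growth": mean(growths), "median_growth": median(growths)}
```

Reporting the peer count alongside the mean and median matters: a base rate drawn from two peers deserves far less weight than one drawn from two hundred.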

Each agent researches the same company independently and forms its own thesis. Only after this first step do they “meet” to compare notes, critique one another, and revise their thinking. The dynamic is meant to imitate how Tetlock’s superforecasters iterated toward better answers: independent views first, constructive debate second, convergence last.
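The three-stage protocol (independent views, debate, convergence) can be sketched as a small orchestration function. This is a minimal illustration, not the setup used in the experiment: `ask` is a hypothetical, stateless stand-in for whatever LLM call you use (prompt in, text out), and the role prompts are paraphrases of the role list. Independence in stage one holds only if `ask` keeps no shared memory between calls.

```python
from typing import Callable

# Hypothetical stand-in for an LLM call: prompt in, text out, no shared state.
LLM = Callable[[str], str]

# Role lenses paraphrased from the role list; the exact wording is an assumption.
ROLES = {
    "Outside-View Analyst": "Pick a reference class and its base rates; give historical context.",
    "Inside-View Specialist": "Use domain and industry knowledge: industry history, competitor margins.",
    "Systems Thinker": "Map the ecosystem, value chain, and how key stakeholders interact.",
    "Incentives Analyst": "Examine management compensation, ethical track record, stock ownership.",
}
DEVIL = "Devil's Advocate"


def run_team(company: str, ask: LLM) -> dict:
    # Stage 1: independent theses. Each prompt carries only the agent's own lens,
    # so no agent sees another agent's work yet.
    drafts = {
        role: ask(f"You are the {role}. {lens}\nWrite an investment thesis on {company}.")
        for role, lens in ROLES.items()
    }

    # Stage 2a: the Devil's Advocate reads every draft and looks for holes.
    bundle = "\n\n".join(f"[{r}]\n{t}" for r, t in drafts.items())
    critique = ask(f"You are the {DEVIL}. Find holes, contradictions, and weak assumptions:\n{bundle}")

    # Stage 2b: each agent revises its own thesis after seeing peers and the critique.
    revised = {
        role: ask(
            f"You are the {role}. Your draft:\n{drafts[role]}\n\n"
            f"Peers' drafts:\n{bundle}\n\nCritique:\n{critique}\n"
            "Revise your thesis; keep any disagreement you can defend."
        )
        for role in ROLES
    }

    # Stage 3: converge last, working from the revised theses rather than first drafts.
    final = ask(
        "Synthesize these revised theses and list unresolved disagreements:\n"
        + "\n\n".join(f"[{r}]\n{t}" for r, t in revised.items())
    )
    return {"drafts": drafts, "critique": critique, "revised": revised, "final": final}
```

Returning the revised theses alongside the synthesis is a deliberate choice in this sketch: it makes it easy to check whether the final convergence step flattened disagreements that were worth keeping.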

Here’s roughly what the workflow looks like:

The results were mixed. The team still made odd mistakes. For example, the agents concluded that Medpace’s revenue is “highly concentrated” because 90% of it comes from small and mid-sized biotechs. I’m pretty sure there is more than one small or mid-sized biotech company in the US.

However, the underlying concept feels promising. When I looked under the hood to see how each agent approached the task, their thought processes were genuinely distinct from each other. The problems emerged when I forced them into premature consensus. At that point, the quality of the analysis collapsed into generic AI output.

There’s still work to do, but the early signals suggest that a well-designed multi-agent structure can create something closer to true cognitive diversity.

Where the Prototype Started to Strain

One limitation emerged almost immediately. When the agents conducted “deep research,” they kept pulling from the same public sources. That is simply a consequence of running on the same LLM with access to the same websites. Even though the frameworks differed, the inputs often converged.

To get around this, I had to manually supply them with richer information. This included financial statements, filings, proprietary transcripts, industry datasets, and primary research. Once this additional material was uploaded, the agents produced more distinct and more interesting views. The quality of their work was much closer to what I had hoped for.

It became clear that diversity of thinking only works if the underlying information is also diverse. Otherwise, the team looks different on the surface but ends up drinking from the same well.
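One rough way to tell whether a team is drinking from the same well is to compare the sources each agent cites. The sketch below is a hypothetical diagnostic, not something described in the experiment: it reduces each agent’s citations to website domains and computes the mean pairwise Jaccard overlap, where values near 1.0 mean the agents are reading essentially the same material. The function names and the domain-level granularity are assumptions.

```python
from itertools import combinations
from urllib.parse import urlparse


def domains(urls: list[str]) -> set[str]:
    """Collapse cited URLs to bare domains, e.g. 'https://www.sec.gov/x' -> 'sec.gov'."""
    return {urlparse(u).netloc.lower().removeprefix("www.") for u in urls}


def jaccard(a: set[str], b: set[str]) -> float:
    """Shared sources divided by all sources; two empty sets count as identical by convention."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def source_overlap(cited: dict[str, set[str]]) -> float:
    """Mean pairwise Jaccard overlap across agents (0.0 = disjoint, 1.0 = identical)."""
    pairs = list(combinations(cited.values(), 2))
    if not pairs:
        raise ValueError("need at least two agents to compare")
    return sum(jaccard(a, b) for a, b in pairs) / len(pairs)
```

Tracking this number before and after supplying each agent with its own documents would show whether the richer inputs actually diversified what the team was reading.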

Why This Is Promising

Even with the gaps, the early results are encouraging. With the right structure, AI agents can behave a little like a superforecasting group. They generate independent perspectives. They challenge each other. They revise their views. And the output is usually less “generic” than any single AI’s first pass.

This experiment also opened up new questions.

What mix of mental models makes for the strongest team?

How many agents are enough, and how many are too many?

Can one agent combine multiple mental models effectively, or does that reintroduce bias?

Does a small, specialized team beat a larger, more diffuse one?

I don’t have the answers yet. But the idea that one person could simulate the strength of a well-functioning investment team feels new and exciting. Tetlock showed that good forecasting is the result of process and structure. Mauboussin showed that judgment improves when investors deliberately choose different vantage points. Munger argued for building a broad toolbox of mental models.

For the first time, technology might let an individual investor stitch these ideas together in a scalable way.